The objective of the TRADR project is to enable a team of humans and robots to collaborate in a disaster response scenario that can last several days. To achieve this, a core capability of the robots is the ability to create a 3D map of their environment and to localize themselves within this map.

The TRADR consortium has recently open-sourced three libraries that enable robots equipped with 3D laser scanners to perform these tasks. The curves library represents the robot’s continuous-time trajectory in 3D space, while the laser_slam library implements the back-end estimation of the localization and mapping system. Finally, the SegMatch library enables the robots to recognize previously visited places and to transmit this information to the back-end in order to close loops and to register the trajectories of different robots.

Links to libraries:

https://github.com/ethz-asl/curves

https://github.com/ethz-asl/laser_slam

https://github.com/ethz-asl/segmatch

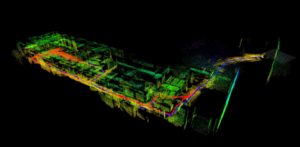

The figure shows a map generated by fusing 3D laser scanner measurements from two unmanned ground vehicles, collected during the TRADR Evaluation exercise at the Gustav Knepper Power Station in Dortmund, Germany. The map is coloured by height, and the robot trajectories are represented as blue and red lines.

For more information about the place recognition algorithm, please consult our paper (https://arxiv.org/pdf/1609.07720v1.pdf) and have a look at our video (https://www.youtube.com/watch?v=iddCgYbgpjE). Easy-to-run demonstrations can be found on the wiki page of the SegMatch repository. More to come!